In recent years cloud technologies have become widespread, and the number of users keeps growing. More and more companies offer cloud storage, so competition between service providers is naturally increasing. Customers now pay attention to nuances they previously ignored, among them the technical features of the hardware platform.

Providers of cloud data storage do not always publish information about the hardware and technology they use. Meanwhile, lack of attention to the hardware components of cloud infrastructure often leads to performance problems: the actual performance of a system can be significantly (by 40-60%) lower than stated.

Over the past few years Intel has made a significant contribution to the development of cloud computing, and it has launched the Intel® Cloud Technology program. The purpose of this program is to make the cloud services market as transparent as possible. It involves 16 companies from around the world: the USA, France, Mexico, India, the Czech Republic and others. All of them build their cloud infrastructure on Intel solutions. The "Powered by Intel® Cloud Technology" logo on these companies' websites is a testament to the high quality of their services.

Our "Cloud Server" and "Cloud Storage" services are built on Intel technology. Intel's latest hardware solutions make it possible to achieve high performance and to provide a high level of reliability and fault tolerance.

Cloud Data Services

This blog is devoted to cloud data services information and reviews.

Thursday, October 8, 2015

Cloud Data Center - How Does It Work?

Fault tolerance in our data centers is provided by redundant equipment that duplicates the functions of vital devices and secures the two things the hosted equipment cannot live without: power supply and cooling.

Cooling

Let's start with refrigeration and air conditioning. The data center uses precision air conditioners (from the English "precision", meaning accuracy) that work according to the traditional "chiller-fan coil" scheme and provide continuous cooling of the server room.

This is an air conditioning system in which the heat carrier between the central cooling machine (chiller) and the local cooling units (fan coils) is a chilled liquid circulating at relatively low pressure.

Besides chillers and fan coil units, the system includes a pumping station (hydronic module), an automatic control subsystem and the pipework connecting them. The load is greatest in summer, when the difference between the ambient temperature and the temperature inside the server room is at its maximum. At other times the system uses "free cooling" technology, which relies on low outside temperatures for natural cooling with minimal load on the chillers. The largest corporations actively use such technologies in their data centers: for example, Microsoft makes the most of them in its data center in the cool climate of Dublin, Ireland. A very interesting photo report can be viewed at the link.

The pumping station is an important component of the system. Its pumps run around the clock, continuously feeding coolant from the chillers to the fan coil units.

Redundancy means that the system needs at least two pumps to operate. We have installed three pumps that work in shifts: every 10 hours a running pump is switched off and an idle pump is started in its place.

This ensures that the pumps accumulate running hours evenly, and if one of the pumps fails, the operation of the system is not affected. During their daily rounds, the system engineers of our data centers always check the condition of the pumps and the readings of their instruments. To monitor the health of the chillers, a separate hardware control panel for the cooling system has been brought out to the room from which monitoring is carried out.
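The shift rotation described above can be sketched in a few lines of Python. This is only an illustration, not the data center's actual control logic: the pump names, the `schedule` function and the assumption that two pumps run while one rests are all hypothetical; only the 10-hour shift length and the three pumps come from the text.

```python
from collections import deque

SHIFT_HOURS = 10  # from the text: pumps are swapped every 10 hours


def schedule(pumps, running_count, shifts):
    """Return a list of (start_hour, running_pumps, idle_pumps) per shift.

    Assumption for this sketch: at each shift change the pump that has been
    running longest is switched off and the pump that has been idle longest
    is started in its place.
    """
    if not 0 < running_count < len(pumps):
        raise ValueError("need at least one running and one idle pump")
    running = deque(pumps[:running_count])
    idle = deque(pumps[running_count:])
    plan = []
    for shift in range(shifts):
        plan.append((shift * SHIFT_HOURS, tuple(running), tuple(idle)))
        stopped = running.popleft()     # longest-running pump goes to rest
        running.append(idle.popleft())  # longest-idle pump takes over
        idle.append(stopped)
    return plan


if __name__ == "__main__":
    for start, running, idle in schedule(["P1", "P2", "P3"], 2, 4):
        print(f"hour {start:>2}: running={running} idle={idle}")
```

With three pumps and two running, every pump rests once every three shifts, so running hours stay balanced and one pump is always available as a spare.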

We use the classic layout of server cabinets, which forms two climate zones in the server room. Two rows of racks stand with their fronts facing each other. Cool air comes up through the raised floor, and the servers draw it in from there. This climate zone is called the "cold corridor" (cold aisle). The temperature in this zone is +20 ± 2 °C.

The air heated by the running servers is released into the space behind the racks, the so-called "hot corridor" (hot aisle). The fan coils located there draw in the hot air for cooling.

Up-to-date temperature readings from the "hot" and "cold" corridors reach the system engineer on round-the-clock duty and are refreshed every 30 seconds.

If the temperature goes out of range, an alarm sounds. During their rounds, engineers measure the temperature of the equipment with contactless laser thermometers. If we find that a client's equipment is overheating, we immediately inform the client and report the recorded temperature.
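The range check behind such an alarm can be sketched as follows. The target of +20 °C, the ± 2 °C tolerance and the 30-second refresh interval come from the text; the sensor names and the functions `out_of_range` and `find_alarms` are hypothetical and serve only as an illustration, not as a description of the real monitoring system.

```python
COLD_TARGET_C = 20.0     # from the text: +20 °C in the cold corridor
COLD_TOLERANCE_C = 2.0   # from the text: ± 2 °C
POLL_INTERVAL_S = 30     # from the text: readings are refreshed every 30 seconds


def out_of_range(temp_c, target=COLD_TARGET_C, tolerance=COLD_TOLERANCE_C):
    """True when a reading deviates from the target by more than the tolerance."""
    return abs(temp_c - target) > tolerance


def find_alarms(readings):
    """Return the (sensor, temperature) pairs that should raise an alarm.

    `readings` is an iterable of (sensor_name, temperature_in_celsius) pairs,
    for example one batch collected every POLL_INTERVAL_S seconds.
    """
    return [(name, temp) for name, temp in readings if out_of_range(temp)]


if __name__ == "__main__":
    batch = [("cold-1", 19.5), ("cold-2", 22.0), ("cold-3", 22.4)]
    for name, temp in find_alarms(batch):
        print(f"ALARM: {name} reports {temp} °C")
```

A reading exactly at the edge of the band (22.0 °C here) is treated as still in range; whether the boundary counts as an alarm is a design choice this sketch makes arbitrarily.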

Wednesday, October 7, 2015

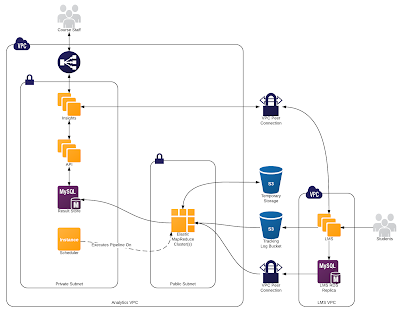

Working with the API of the Virtual Private Cloud

We continue our series of articles about the new Virtual Private Cloud service. Today we talk about the OpenStack API and show how to interact with it using console clients.

Create User

Before you start working with the API, you need to create a new user and add it to a project. In the Virtual Private Cloud menu, select Users.

The list is still empty. Click the Create User button; in the window that opens, enter a name and click Create again. A password for access to the panel will be generated automatically.

View the properties of the new user by clicking the icon next to its name in the list.

Click Add to Project and select from the displayed list the projects to which the new user will have access.

The user will then appear in the control panels of these projects. Next to the user's name there will be a link through which the project's resources can be accessed in a browser.

Follow this link and log in to the project with the created user's account. Then go to the Access tab and download the RC file (a script that console clients use to authenticate against the Identity API v3).
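To make clear what the RC file is used for, here is a minimal Python sketch of the request a client sends to the Identity API v3 to obtain a token. It assumes the RC file exports the standard OpenStack client variables (`OS_AUTH_URL`, `OS_USERNAME`, `OS_PASSWORD`, `OS_PROJECT_NAME`, `OS_USER_DOMAIN_NAME`, `OS_PROJECT_DOMAIN_NAME`) and that `OS_AUTH_URL` ends with `/v3`; the variable set in a particular provider's RC file may differ. The function name `build_token_request` is hypothetical.

```python
import json


def build_token_request(env):
    """Build the URL and JSON body of an Identity API v3 password-auth request.

    `env` is a mapping of the variables exported by the RC file
    (for example os.environ after the file has been sourced).
    """
    url = env["OS_AUTH_URL"].rstrip("/") + "/auth/tokens"
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": env["OS_USERNAME"],
                        "domain": {"name": env["OS_USER_DOMAIN_NAME"]},
                        "password": env["OS_PASSWORD"],
                    }
                },
            },
            # Scope the token to the project the user was added to.
            "scope": {
                "project": {
                    "name": env["OS_PROJECT_NAME"],
                    "domain": {"name": env["OS_PROJECT_DOMAIN_NAME"]},
                }
            },
        }
    }
    return url, json.dumps(body)
```

The body is sent with a POST request and the `Content-Type: application/json` header; on success the token is returned in the `X-Subject-Token` response header. The console clients perform this exchange themselves, so the sketch is useful mainly for understanding or debugging.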

Install Software

To set up a system for working with the project, you need to install additional software. In this article we give installation instructions for Ubuntu 14.04. Commands for other operating systems may differ; instructions for Debian 7.0 and CentOS 6.5 can be viewed directly in the control panel (on the Access tab).

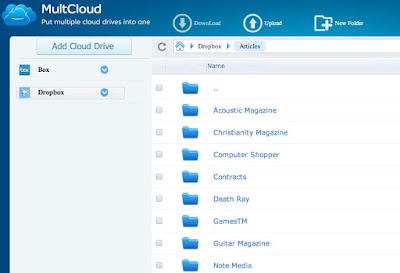

Single Page Applications in Cloud Storage

We have already written about how our cloud storage can be used as a platform for static sites (1 and 2). Today we explain how it can host modern sites built on the popular and currently relevant Single Page Application (SPA) approach.

The SPA Approach

The abbreviation SPA stands for "single page application". In the narrow sense, it denotes a single-page site that is executed directly on the client side, in the browser. In a broader sense, SPA (the abbreviation SPI, "single page interface", is also sometimes used) means a whole approach to web development that is becoming more and more widespread today. What is the essence of this approach, and why is it gaining popularity?

Let us start from afar. Today the most common type of site is, of course, the dynamic site, whose pages are generated on the server side. For all the obvious convenience for developers (each request creates a page from scratch), such sites are often a real headache for clients.

Here is a far from complete list of the inconveniences they have to face:

• Even though some frameworks can return a form pre-filled with the data the client entered last time, the need to reload the page for validation makes working with the site uncomfortable.

• Generating pages on the server side puts a serious load on the server.

These problems are often solved as follows: the page is still generated on the server side, but small JavaScript scripts run on the client side and can, for example, validate a form before it is sent to the server.

At first sight the solution is quite good, but it has disadvantages:

• Previously the functions of the frontend and the backend were clearly separated (the backend was responsible for the logic and for generating the view, the frontend for display); now the logic is duplicated on the frontend, which can hardly be called good architectural practice.

• The code responsible for generating the view has to be duplicated constantly, and this causes problems: copy-paste, markup discrepancies, broken selectors, code that is hard to maintain, and so on.

Of course, one can live with all of this. But in many cases the SPA approach is much more efficient.

From the standpoint of this approach, a site is understood not as a set of pages but as a set of states of one and the same HTML page. When the state changes, new content is loaded asynchronously without reloading the page itself.

An SPA is not a site in the classic sense but an application that runs on the client side, in the browser. From the user's point of view, therefore, there are almost never problems with speed, even on a slow or unstable Internet connection (for example, when viewing the site from a mobile device). High speed is also ensured by the fact that the server no longer sends markup to the client, only data (mainly in JSON format), and the amount of data is small.
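The difference in what travels over the network can be illustrated with a toy comparison. The sketch below is written in Python purely for illustration (a real SPA would consume the data with JavaScript in the browser); the page template, the order records and both function names are hypothetical.

```python
import json
from html import escape

# A deliberately tiny "classic" page: the whole document is rebuilt per request.
PAGE_TEMPLATE = """<!DOCTYPE html>
<html><head><title>Orders</title></head>
<body><header>Site header</header>
<table>{rows}</table>
<footer>Site footer</footer></body></html>"""


def render_full_page(orders):
    """Server-side rendering: markup and data are sent together."""
    rows = "".join(
        f"<tr><td>{order['id']}</td><td>{escape(order['item'])}</td></tr>"
        for order in orders
    )
    return PAGE_TEMPLATE.format(rows=rows)


def render_state_update(orders):
    """SPA style: only the data is sent; the page already in the browser renders it."""
    return json.dumps({"orders": orders}, separators=(",", ":"))


if __name__ == "__main__":
    orders = [{"id": 1, "item": "disk"}, {"id": 2, "item": "rack"}]
    print(len(render_full_page(orders)), "bytes of HTML")
    print(len(render_state_update(orders)), "bytes of JSON")
```

In this toy case the JSON response is several times smaller than the full page, and the gap tends to grow on real pages, where the surrounding markup, headers and footers are much heavier than the data itself.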

Recently, many interesting technologies and services have appeared that greatly simplify the creation and use of SPAs. Let us consider some of them in more detail.

BaaS (Backend as a Service)

Technologies that render the interface on the client and communicate with the server only by sending requests, without reloading the page, have existed for a long time. For example, the XMLHttpRequest API appeared around 2000. But using these technologies was difficult: the backend that implements request authorization and data access had to be rewritten.

Today everything has become much easier thanks to the emergence of numerous BaaS services. BaaS stands for Backend as a Service. BaaS services provide web application developers with a ready-made server infrastructure (usually located in the cloud). Thanks to them, you can save the time (and often the money) spent on writing server-side code and focus on improving the application and developing its functionality. With BaaS you can connect a backend with any set of functions to any application or site: simply add the corresponding library to the page.

One example is the MongoLab service, which lets you connect a cloud MongoDB database to web applications and sites.

Another interesting example is Firebase. This service is a cloud database and an API for real-time applications. It can be used, in particular, to organize real-time data exchange between the client and the server: just connect the JavaScript library to the page and set up a handler for the data change event. With Firebase it is easy to implement, for example, a chat or a feed of user activity.

Tuesday, October 6, 2015

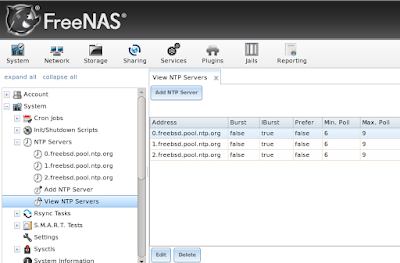

NTP Settings on the Cloud Server.

Operating systems run many services whose normal functioning depends on the accuracy of the system clock. If the exact time is not set on the server, this can cause various problems.

For example, on a local network the clocks of machines that share files need to be synchronized; otherwise it will be impossible to establish file modification times correctly. This in turn can cause version conflicts or the overwriting of important data.

If the exact time is not set on the server, problems arise with cron jobs: it is unclear when they will start. It also becomes very difficult to analyze system event logs when diagnosing failures and faults...

The list could go on...

To avoid all the problems described above, you need to configure synchronization of the system clock. On Linux this is done with the NTP protocol (Network Time Protocol). In this article we describe in detail how to install and configure an NTP server. We start with a short theoretical introduction.

How the NTP protocol works

The NTP protocol is based on a hierarchy of time servers divided into levels (strata). Level 0 consists of reference clocks (atomic clocks or GPS clocks). No NTP servers operate at level 0.

Level 1 NTP servers synchronize with the reference clocks and serve as time sources for level 2 servers. Level 2 servers synchronize with level 1 servers but can also synchronize with each other. Servers of level 3 and below work in the same way. The stratum field can hold up to 256 values, but in practice only levels 1 to 15 are used; the value 16 marks a clock that is not synchronized.
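The way levels propagate down the hierarchy can be sketched as follows. This is a simplified model, not the algorithm used by a real NTP daemon: it assumes every server ends up one level below its closest source, and the server names and the `assign_strata` function are hypothetical.

```python
from collections import deque

MAX_STRATUM = 15      # highest level a synchronized server can have
UNSYNCHRONIZED = 16   # value reported by a server with no usable source


def assign_strata(reference_clocks, sources):
    """Compute the level of every server.

    `reference_clocks` lists the level 0 devices; `sources` maps each server
    to the list of clocks or servers it synchronizes with. Servers at the
    same level may list each other, as described in the text.
    """
    clients = {}  # inverted map: source -> servers that use it
    for server, upstream in sources.items():
        for source in upstream:
            clients.setdefault(source, []).append(server)

    strata = {clock: 0 for clock in reference_clocks}
    queue = deque(reference_clocks)
    while queue:  # breadth-first walk gives each server its lowest possible level
        node = queue.popleft()
        for client in clients.get(node, []):
            if client not in strata and strata[node] + 1 <= MAX_STRATUM:
                strata[client] = strata[node] + 1
                queue.append(client)

    for server in sources:  # anything not reached has no path to a reference clock
        strata.setdefault(server, UNSYNCHRONIZED)
    return strata
```

For example, with `sources = {"a": ["gps"], "b": ["a", "c"], "c": ["a", "b"], "d": ["b"]}` and `gps` as the reference clock, server `a` is at level 1, the mutually synchronized `b` and `c` are at level 2, and `d` is at level 3.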

The hierarchical structure of the NTP protocol provides fault tolerance and redundancy. If the connection to upstream servers fails, backup servers take over the synchronization process. Redundancy ensures the continuous availability of NTP servers. When synchronizing with several servers, NTP uses data from all sources to calculate the most accurate time.

Installing and configuring the NTP server

The best-known and most popular software tool for time synchronization is the ntpd daemon. Depending on the settings in its configuration file (discussed below), it can act both as a server and as a client (that is, it can both receive time from remote hosts and distribute it to other hosts). Below we describe in detail how to install and configure the daemon on Ubuntu.
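How a client turns an exchange with one server into a clock correction can be shown with the standard NTP formulas for offset and round-trip delay, computed from four timestamps. The sketch below also picks the sample with the smallest delay; that last step is a deliberate simplification for illustration, since a real NTP daemon applies more elaborate selection and combining algorithms. The function names are hypothetical.

```python
def offset_and_delay(t1, t2, t3, t4):
    """Standard NTP on-wire calculation.

    t1: client sends the request   (client clock)
    t2: server receives it         (server clock)
    t3: server sends the reply     (server clock)
    t4: client receives the reply  (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2  # how far the client clock is behind
    delay = (t4 - t1) - (t3 - t2)         # network round trip, excluding server time
    return offset, delay


def best_sample(samples):
    """Simplification: trust the exchange with the shortest round-trip delay."""
    return min((offset_and_delay(*s) for s in samples), key=lambda od: od[1])


if __name__ == "__main__":
    samples = [
        (100, 160, 161, 103),  # quick exchange with one server
        (200, 262, 263, 209),  # slower exchange with another server
    ]
    offset, delay = best_sample(samples)
    print(f"adjust clock by {offset} s (round trip {delay} s)")
```

The offset formula assumes the request and the reply take equally long to travel, which is why exchanges with a short round trip give the most trustworthy estimate.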
